Machine Learning in Project Management

Between 2025 and 2030, estimation and scheduling will stop being “expert guesses with templates” and become living systems that learn from real delivery signals. Teams that keep relying on static plans will get crushed by budget pressure, resource volatility, and reorg-driven demands for speed. Machine learning will not magically fix messy projects. It will expose what is already broken, and bad inputs will produce confident lies. Used correctly, though, ML turns uncertainty into ranges, flags schedule risk early, and helps PMs defend decisions with evidence, not optimism.

1) Why estimation and scheduling are breaking right now

Most project schedules fail for the same reason: they are built to look certain, not to be true. A plan that “aligns stakeholders” but cannot survive the first wave of changes is not a plan. It is a comfort document. That weakness becomes lethal in environments shaped by cost pressure, such as global inflation impacts on project budgets, and by efficiency-driven staffing shifts, such as PM structures reshaped for efficiency. When leadership compresses teams through moves like middle-management reductions, the tolerance for schedule drift disappears.

Here is the uncomfortable truth that future PMs must accept: estimation is rarely a math problem. It is a data and incentives problem. You see it when stakeholders push for an aggressive date, then punish the PM for missing it. You see it when teams inflate buffers, then get forced to “remove padding,” then burn out anyway. You see it when scope quietly expands and the schedule stays frozen. These dynamics get worse during reorganizations where leaders implement new project management frameworks amid reorgs and shift accountability to smaller groups.

Machine learning matters because it changes the fight. It moves planning from arguments to evidence. It also forces you to standardize language and definitions. If your org cannot even agree on “done,” your ML models will learn nonsense. That is why strong foundations like project initiation terms every PM must know and shared vocabulary from top project management terms you must know are not basic. They are prerequisites for data-driven planning.

From 2025 to 2030, the most valuable PMs will be the ones who can combine three capabilities:

Financial clarity, using the language in essential project budgeting terms and decision-friendly cost framing from top cost management terms for project managers.

Risk literacy, using disciplined thinking from the project risk management glossary and practical grounding from risk identification and assessment terms.

Tooling reality, understanding why organizations invest in systems during project management software surges and scale new workflows through digital transformation across PMOs.

ML does not replace those fundamentals. It amplifies them. If your governance is weak, ML makes the damage faster. If your governance is strong, ML makes your delivery harder to disrupt.

2) What ML actually changes in estimation and scheduling

Machine learning changes two things that traditional planning tools struggle with: uncertainty and learning. Classic scheduling assumes a single duration per task and then chains tasks together. ML treats duration as a probability distribution that updates as new data arrives. That is a massive shift in governance because it replaces false certainty with defensible ranges.

It kills the single-number lie

If your plan says a milestone is “June 15,” you are pretending the world is deterministic. ML-driven estimation forces you to answer the real questions: what is the P50 date (the date you have a 50% chance of hitting), what is the P80 date (an 80% chance), and what conditions change that distribution. This matches how decisions actually get made under pressure, as with project budgets adapting to inflation: leadership does not need poetry. Leadership needs confidence levels and trade-offs.
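One way to get from three-point task estimates to P50 and P80 is a small Monte Carlo simulation. The sketch below is illustrative only: the task list is hypothetical, the tasks are assumed to run strictly in sequence, and the triangular distribution is a convenient assumption, not a recommendation for your data.

```python
import math
import random

def simulate_total_days(tasks, runs=10_000, seed=7):
    """Monte Carlo over sequential tasks.

    tasks: list of (optimistic, most_likely, pessimistic) durations in days.
    The triangular distribution is an illustrative assumption.
    """
    rng = random.Random(seed)
    totals = [
        sum(rng.triangular(low, high, mode) for low, mode, high in tasks)
        for _ in range(runs)
    ]
    return sorted(totals)

def percentile(sorted_values, p):
    """Nearest-rank percentile of a pre-sorted list, with 0 < p <= 100."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]

# Hypothetical three-task chain, in working days.
tasks = [(3, 5, 10), (8, 10, 20), (2, 4, 9)]
totals = simulate_total_days(tasks)
p50, p80 = percentile(totals, 50), percentile(totals, 80)
print(f"P50: {p50:.1f} days, P80: {p80:.1f} days")
```

The gap between P50 and P80 is the conversation starter: it is the price of moving from a coin-flip commitment to one you can defend.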

To make ranges useful, you need consistent definitions, which is why the discipline in project initiation terminology and a shared vocabulary like must-know PM terms directly affects estimation quality. Without common definitions, your “actuals” are not real actuals. They are different people recording different truths.

It learns from delivery behavior, not planning behavior

Traditional estimates are biased by politics and optimism. ML learns from observed outcomes, especially if you integrate data from tools that organizations are rapidly adopting, visible in investment surges in project management software and the broader move toward digital PMO transformation. That matters because tool adoption creates more data, and more data creates better models, if you clean it.

This also connects to workforce dynamics. In faster, flatter orgs shaped by efficiency reshaping of PM structures, you do not get unlimited time to “manually manage” schedule conflicts. ML-based signals help a smaller PM function manage more complexity with less noise.

It turns risk into something measurable

Most PMs talk about risk in vague terms, then act surprised later. ML allows you to quantify risk as probabilities tied to real indicators. That aligns with disciplined language from the risk management glossary and practical alignment via risk identification and assessment terms. When risk becomes measurable, it becomes governable.

It tightens the link between schedule and cost

The future reality is that schedules are budgets. Every extra week has burn implications. ML systems can forecast cost-at-completion bands by combining timeline probability with spend trends. This makes you dramatically stronger in executive conversations because you are using the language from essential project budgeting terms and connecting it to schedule outcomes with the framing in cost management terms PMs use.
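The arithmetic behind “schedules are budgets” is simple enough to sketch. The function below assumes a constant daily burn rate and takes remaining-duration estimates per confidence level as given; both the figures and the names are hypothetical.

```python
def cost_at_completion_bands(spent_to_date, daily_burn, remaining_days_by_level):
    """Project cost at completion for each confidence level.

    Assumes a flat daily burn rate, which is a simplification.
    """
    return {
        level: spent_to_date + daily_burn * days
        for level, days in remaining_days_by_level.items()
    }

# Hypothetical project: 200k spent, 4k per working day, 30 or 42 days left.
bands = cost_at_completion_bands(200_000, 4_000, {"P50": 30, "P80": 42})
print(bands)  # {'P50': 320000, 'P80': 368000}
```

In this made-up case, the difference between the P50 and P80 schedule is 48,000 in spend. That number tends to end arguments about “padding” quickly.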

3) The ML methods that will dominate estimation and scheduling from 2025 to 2030

You do not need to become a data scientist to lead ML-enabled planning. You need to understand what the methods do, what data they need, and what failure modes they create.

1) Probabilistic forecasting becomes the standard

From 2025 to 2030, range-based forecasting will become expected, especially in environments that must justify spending under economic pressure that increases agile demand and tighter governance structures in new frameworks during reorganizations. Probabilistic models output distributions, not points. That is what enables P50 and P80 planning.

What PMs must enforce:

A rule that forecasts are always ranges

A rule that commitments are tied to confidence levels

A rule that the model must be calibrated using actuals

Calibration is the difference between a useful forecast and a confident lie. This is where risk discipline from the risk management glossary becomes practical.
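A basic calibration check needs no ML at all. If you label a forecast “P80,” roughly 80% of finished items should have come in at or under it. The sketch below measures that share; the sample numbers are invented for illustration.

```python
def coverage(forecast_days, actual_days):
    """Share of finished items whose actual duration met the forecast."""
    if len(forecast_days) != len(actual_days) or not forecast_days:
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(1 for f, a in zip(forecast_days, actual_days) if a <= f)
    return hits / len(forecast_days)

# Hypothetical history: P80 forecasts vs. what actually happened (days).
p80_forecasts = [10, 12, 8, 20, 15]
actuals = [9, 14, 8, 25, 13]
print(coverage(p80_forecasts, actuals))  # 0.6
```

A “P80” that only holds 60% of the time is overconfident. With real data you would want far more than five items before drawing conclusions, but the test itself stays this simple.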

2) Similarity modeling for early estimation

Early phase estimation usually has the worst uncertainty. ML helps by comparing a new initiative to historical work that looks similar. This requires clean taxonomy and clear scope definitions, which is why initiation clarity from project initiation terms matters. If past projects were labeled inconsistently, similarity will be garbage.

The PM upgrade is to standardize:

Work types and categories

Complexity indicators

Definition-of-done signals

This becomes easier when teams share language from top PM terms and cost framing from project budgeting terms.
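Similarity-based estimation can be sketched as a nearest-neighbor lookup: find the past projects that look most like the new one and use their actual durations as the range. Everything below is hypothetical, including the feature tuple (team size, integration count, complexity score). The features are assumed to be on comparable scales already; with real data you would normalize them first.

```python
import math
import statistics

def nearest_analogs(new_features, history, k=3):
    """Return the k past projects closest to new_features (Euclidean)."""
    return sorted(
        history, key=lambda past: math.dist(new_features, past["features"])
    )[:k]

def analog_estimate(new_features, history, k=3):
    """Summarize the actual durations of the k nearest analogs."""
    durations = sorted(
        p["actual_days"] for p in nearest_analogs(new_features, history, k)
    )
    return {
        "low": durations[0],
        "median": statistics.median(durations),
        "high": durations[-1],
    }

# Hypothetical history: (team size, integrations, complexity score).
history = [
    {"name": "A", "features": (2, 3, 1), "actual_days": 40},
    {"name": "B", "features": (5, 8, 4), "actual_days": 120},
    {"name": "C", "features": (2, 4, 1), "actual_days": 55},
    {"name": "D", "features": (3, 3, 2), "actual_days": 60},
    {"name": "E", "features": (5, 7, 5), "actual_days": 150},
]
print(analog_estimate((2, 3, 2), history))
# {'low': 40, 'median': 55, 'high': 60}
```

If work types and complexity scores were recorded inconsistently, the “nearest” projects will be near only by accident.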

3) Graph and dependency intelligence

Scheduling fails at interfaces. ML that models dependencies as graphs can flag where handoffs break, where ownership is unclear, and where risk concentrates. This becomes even more relevant as portfolios expand with tech adoption and complexity, visible in digital transformation across PMOs and emerging tool adoption topics like blockchain applications in project management.

The governance move that makes this work: treat dependencies as first-class objects, not footnotes.
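Treating dependencies as data, not footnotes, can start very small. The sketch below stores predecessors in a dictionary, computes earliest finish times in dependency order using Python's standard `graphlib` module (3.9+), and counts how many tasks wait on each predecessor. It is plain graph analysis rather than ML, and the task names and durations are hypothetical, but it is the structure a learned model would sit on top of.

```python
from graphlib import TopologicalSorter

def earliest_finish(durations, depends_on):
    """Earliest finish day per task, given predecessor sets."""
    finish = {}
    for task in TopologicalSorter(depends_on).static_order():
        preds = depends_on.get(task, ())
        start = max((finish[p] for p in preds), default=0)
        finish[task] = start + durations[task]
    return finish

def handoff_hotspots(depends_on):
    """Count how many tasks are waiting on each predecessor."""
    counts = {}
    for preds in depends_on.values():
        for p in preds:
            counts[p] = counts.get(p, 0) + 1
    return counts

# Hypothetical plan: task -> set of tasks it depends on.
durations = {"design": 5, "api": 8, "ui": 6, "security_review": 3, "release": 1}
depends_on = {
    "api": {"design"},
    "ui": {"design"},
    "security_review": {"api"},
    "release": {"ui", "security_review"},
}
finish = earliest_finish(durations, depends_on)
print(finish["release"])             # 17
print(handoff_hotspots(depends_on))  # design has two tasks waiting on it
```

Tasks with many dependents are where a one-day slip multiplies, so they are the first place to look for unclear ownership.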

4) NLP for blocker and change request intelligence

A large chunk of schedule risk sits in unstructured text: issue descriptions, change requests, meeting notes. NLP can classify blockers, extract decisions, and predict recurring failure patterns. This aligns with a world where AI is increasingly embedded in PM workflows, highlighted by record levels of AI adoption in project management.

The PM must protect signal quality:

Force consistent issue tagging

Require clear acceptance criteria

Use common risk language from the risk glossary
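Consistent tagging is easier to enforce when there is a baseline tagger to compare against. The sketch below is deliberately not a trained NLP model. It is a keyword-rule stand-in with a made-up taxonomy, useful mainly for seeing how messy your issue text is before investing in anything smarter.

```python
import re
from collections import Counter

# Hypothetical blocker taxonomy; replace with your own risk language.
BLOCKER_KEYWORDS = {
    "dependency": {"waiting", "blocked", "upstream", "vendor"},
    "scope_change": {"scope", "requirement", "rework", "redesign"},
    "security": {"security", "audit", "compliance", "vulnerability"},
}

def tag_blocker(text):
    """Return the label with the most keyword hits, or 'untagged'."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    scores = {
        label: len(tokens & words) for label, words in BLOCKER_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "untagged"

notes = [
    "Waiting on vendor API credentials",
    "Security audit flagged a vulnerability",
    "New requirement forces rework of the export module",
    "Team offsite next week",
]
print(Counter(tag_blocker(n) for n in notes))
```

A large “untagged” count is itself a signal: either the taxonomy is incomplete or the issue descriptions are too vague for any classifier to use.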

5) Security and tooling risk as schedule risk

From 2025 onward, security events and compliance gates increasingly disrupt timelines. ML systems that detect anomalies and predict gate readiness reduce late surprises. This is not theoretical. It is reflected by real pressure such as cybersecurity concerns prompting PM software overhauls. If your schedule ignores security constraints, your schedule is fantasy.

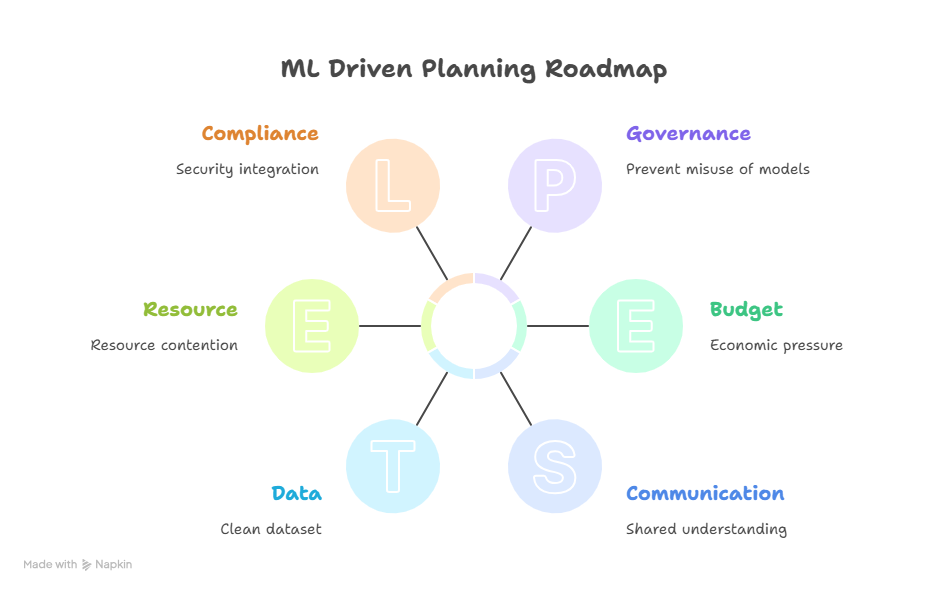

4) A practical implementation roadmap for ML driven planning

Most ML initiatives fail because they start with models instead of governance. The roadmap that works starts with definitions, then data, then pilots, then scale. This is the same logic that organizations follow when modernizing delivery tooling through PM software investments under economic pressure and expanding capability during digital transformation across PMOs.

Step 1: Fix your planning language before you fix your predictions

If “done” means different things across teams, your actuals are unreliable. Standardize terminology using project initiation terms and reinforce a shared baseline with top PM terms. Then codify definitions in your templates so the system stays consistent.

Step 2: Build a clean dataset from operational truth, not opinions

Your best data often lives in task tools, version control, ticket systems, and finance trackers. Tie this to cost vocabulary from essential project budgeting terms so estimates connect to funding logic. Track planned vs actual, scope changes, blockers, and dependency churn. If you skip this, your models will learn the wrong thing.
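What “planned vs actual, scope changes, blockers, and dependency churn” looks like as a record is worth pinning down early. The sketch below is one possible shape. The field names are hypothetical, and durations are counted in calendar days for simplicity.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class TaskRecord:
    """One row of delivery history (illustrative schema)."""
    task_id: str
    work_type: str
    planned_days: int
    started_on: date
    finished_on: Optional[date] = None  # None while the task is open
    scope_changes: int = 0
    blocker_count: int = 0
    dependency_changes: int = 0

    def actual_days(self):
        """Calendar days from start to finish, or None if still open."""
        if self.finished_on is None:
            return None
        return (self.finished_on - self.started_on).days

    def slip_days(self):
        """Actual minus planned; positive means late."""
        actual = self.actual_days()
        return None if actual is None else actual - self.planned_days

record = TaskRecord("T-1", "integration", 10, date(2026, 1, 5), date(2026, 1, 19))
print(record.slip_days())  # 4
```

Keeping open tasks as `None` instead of guessing a finish date avoids the backfilled “actuals” that quietly poison a model.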

Step 3: Start with one high-value pilot that leaders actually care about

Good pilots are not “cool.” They are painful problems with visible upside:

Forecasting milestone confidence bands

Predicting schedule slip risk

Modeling resource contention windows

Pick one and measure improvement with simple metrics like forecast accuracy and early warning lead time. Align your framing with risk language from the risk glossary so leaders interpret outputs correctly.
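Both pilot metrics can be computed with the standard library. The sketch below defines forecast accuracy as mean absolute error in days and early warning lead time as the gap between the first risk flag and the actual slip. Those definitions, and the sample dates, are assumptions for illustration.

```python
import statistics
from datetime import date

def mean_absolute_error_days(forecast_dates, actual_dates):
    """Average absolute gap, in days, between forecast and actual dates."""
    return statistics.mean(
        abs((f - a).days) for f, a in zip(forecast_dates, actual_dates)
    )

def warning_lead_days(flagged_on, slipped_on):
    """Days of notice between the first risk flag and the slip itself."""
    return (slipped_on - flagged_on).days

# Hypothetical milestones.
forecasts = [date(2026, 3, 1), date(2026, 4, 15)]
actuals = [date(2026, 3, 8), date(2026, 4, 10)]
print(mean_absolute_error_days(forecasts, actuals))               # 6
print(warning_lead_days(date(2026, 2, 10), date(2026, 3, 1)))     # 19
```

Track both numbers before and after the pilot. A model that is no more accurate but gives three extra weeks of warning can still be worth keeping.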

Step 4: Put governance around the model to prevent misuse

This is where projects collapse if you are careless. Without guardrails, teams will weaponize ML outputs to force impossible deadlines. Build governance that includes:

Confidence bands required for commitments

Clear thresholds for escalation

Bias monitoring to catch systematic underestimation

This becomes more important in environments that are already high-pressure due to inflation and budget scrutiny and operational compression like efficiency-led PM structure changes.
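Bias monitoring can begin as a single ratio. The sketch below takes the median of actual-to-planned duration and flags it against an escalation threshold. The 1.15 threshold and the sample figures are hypothetical, so pick a threshold that your governance group agrees on.

```python
import statistics

def estimation_bias(planned_days, actual_days):
    """Median ratio of actual to planned; above 1.0 means underestimation."""
    ratios = [a / p for p, a in zip(planned_days, actual_days)]
    return statistics.median(ratios)

def needs_escalation(bias, threshold=1.15):
    """True when typical overrun exceeds the agreed threshold."""
    return bias > threshold

# Hypothetical completed tasks (days).
planned = [10, 20, 5, 8]
actual = [12, 26, 5, 10]
bias = estimation_bias(planned, actual)
print(round(bias, 3), needs_escalation(bias))  # 1.225 True
```

The median is used instead of the mean so that one runaway task does not hide, or fake, a systematic pattern.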

Step 5: Integrate security and compliance gating early

If security freezes delivery late, your forecast is useless. Incorporate constraints and checkpoints with awareness drawn from cybersecurity concerns prompting tool overhauls. Treat security readiness as a schedule variable, not a last-week checklist.

5) The hidden traps that will make ML estimation fail

The biggest danger is not “bad accuracy.” It is false confidence. A bad model that looks confident destroys trust faster than a human estimate because it feels objective.

Trap 1: Garbage actuals

If teams backfill tasks late or close items without real completion criteria, your model learns fantasy. Fix this with initiation discipline from project initiation terms and operational standardization that aligns with digital PMO transformation.

Trap 2: Incentives that punish honesty

If people get punished for realistic ranges, they will pressure the model's outputs toward the dates leadership wants to hear. You must anchor commitments to budget logic, using language from project budgeting terms and cost management terms. When leaders see the cost of date fantasy, the culture shifts.

Trap 3: Confusing agile with unstructured

Agile shortens the planning horizon. It does not remove discipline. If you want to operate in uncertainty, learn why agile demand rises under economic uncertainty and apply the same control mindset you would use in any risk-sensitive environment, using the risk management glossary.

Trap 4: Tool fragmentation and integration gaps

Many orgs collect data across disconnected systems. That is why investment trends like PM software surges are tied to governance needs, not aesthetics. Your ML system needs a single source of truth, even if it is a lightweight layer.

Trap 5: Ignoring emerging tech dependencies

New tooling introduces new dependencies. If your portfolio experiments with advanced technologies, learn to treat them as schedule risk drivers, similar to how blockchain applications in project management add interface complexity. This is not about hype. It is about integration reality.

6) FAQs: Machine Learning in Project Estimation and Scheduling

-

The biggest benefit is replacing single-number estimates with probability-based ranges. That shift makes planning honest and defensible under pressure like inflation impacts on project budgets. When you can state P50 and P80 dates and explain the drivers using the risk management glossary, stakeholders stop fighting about feelings and start choosing trade-offs.

-

ML will automate repetitive scheduling work and surface risks faster, but it will not replace governance, decision design, and stakeholder management. The PM who understands cost language from essential project budgeting terms and risk framing from risk identification terms will become more valuable because ML gives them sharper evidence. The PM who only updates timelines will be replaced first.

-

You need planned vs actual durations, scope change records, resource allocation, dependency structures, and blocker histories. You also need clean definitions, built on project initiation terms and shared language from top PM terms. Without consistent “done” criteria, your actuals are inconsistent, and your model learns noise.

-

You set governance rules: always present confidence bands, tie commitments to probability levels, and document assumptions. Anchor trade-offs to cost impact using cost management terms for PMs and budget framing from project budgeting terms. This protects the model from becoming a weapon for date fantasy and keeps trust intact.

-

ML improves schedule risk management by detecting leading indicators earlier than humans do. It can flag patterns like rising blocker frequency, dependency churn, or resource contention. Then you map those to structured risk responses using the project risk management glossary and the framework in risk identification terms. The win is not prediction. The win is earlier action.

-

Start with a single project line where outcomes matter and data exists. Implement probabilistic milestone forecasts and schedule risk scoring. Use tooling momentum described in PM software investment surges and workflow maturity from digital PMO transformation. Measure forecast accuracy and the time between risk detection and mitigation. If you cannot show those two improvements, the pilot will be dismissed.

-

Treat security and compliance as schedule variables, not end-phase gates. Add readiness checkpoints, track audit risks, and monitor tool integrity, especially in environments affected by cybersecurity concerns prompting tool overhauls. A schedule that ignores security is a schedule that will break late, when fixes are most expensive.